Minor changelog for release version 11.3.1

Improvements

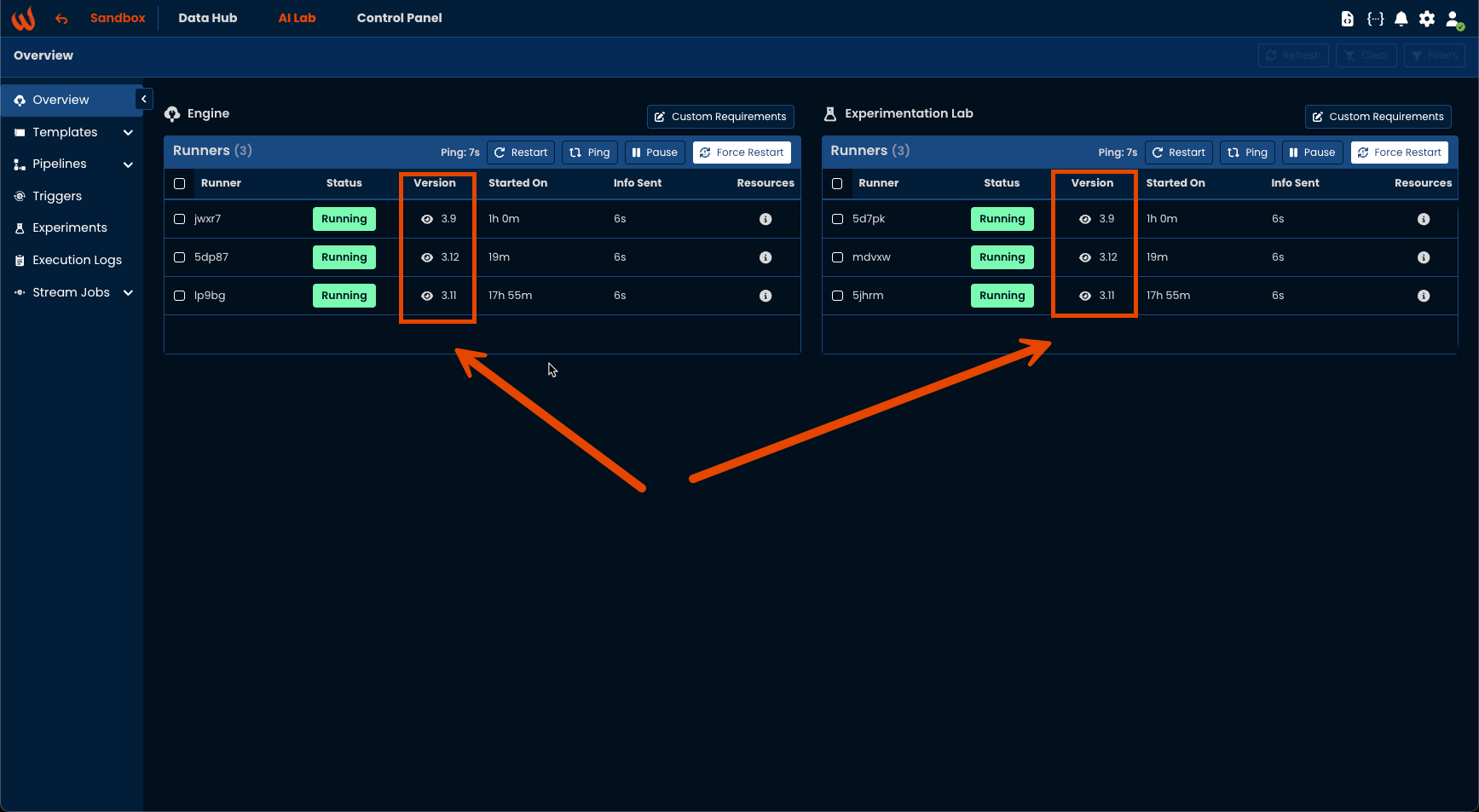

- Add support for multiple Python versions in the pipeline image

- Add support for choosing the appropriate Python version for pipeline edge

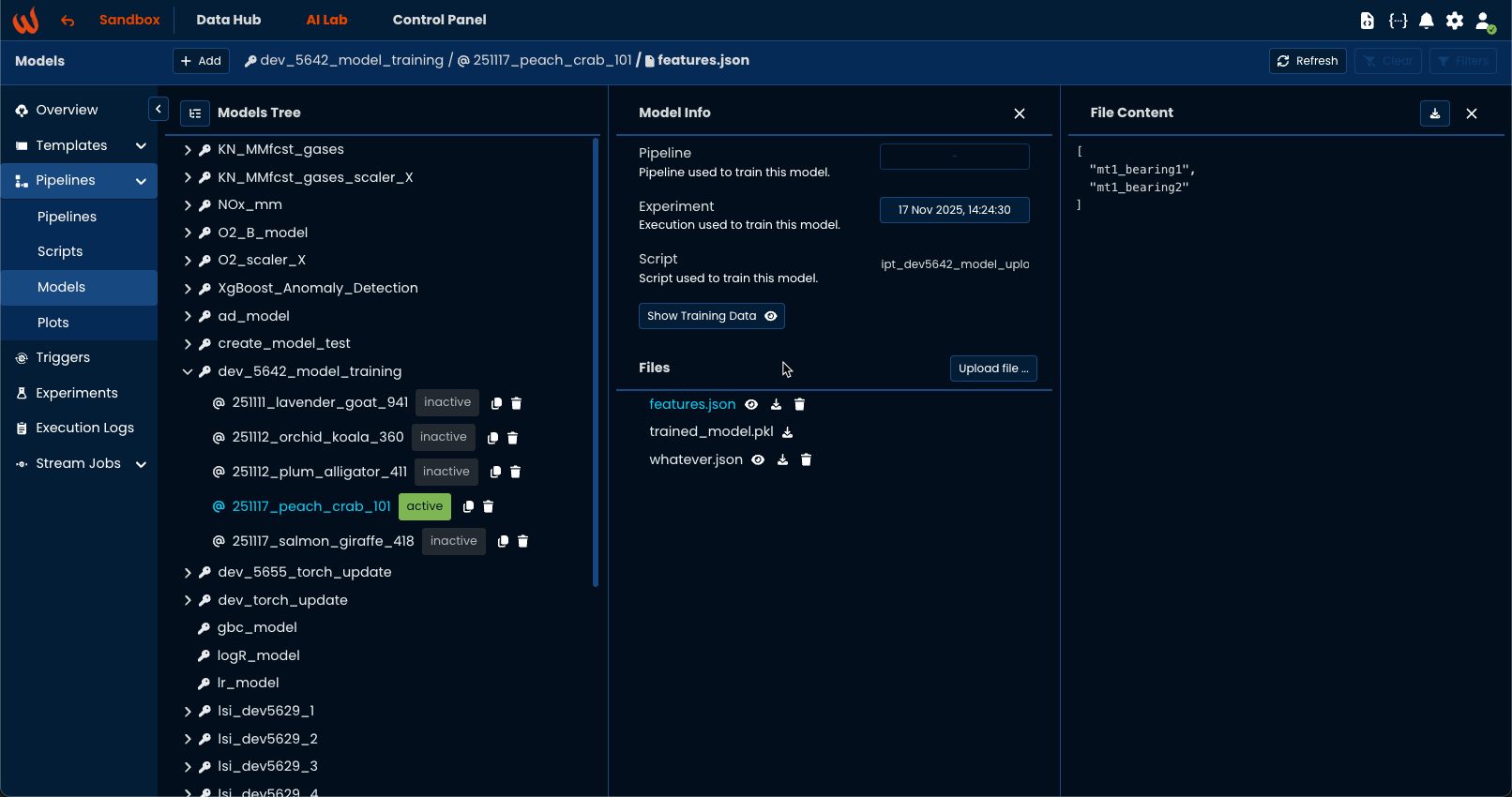

- Add the ability to select model training features from a JSON file inside a pipeline

- Improve error messages when a model cannot be trained in an inference pipeline

- Add checkboxes to choose the train, plot, and write options on experiments

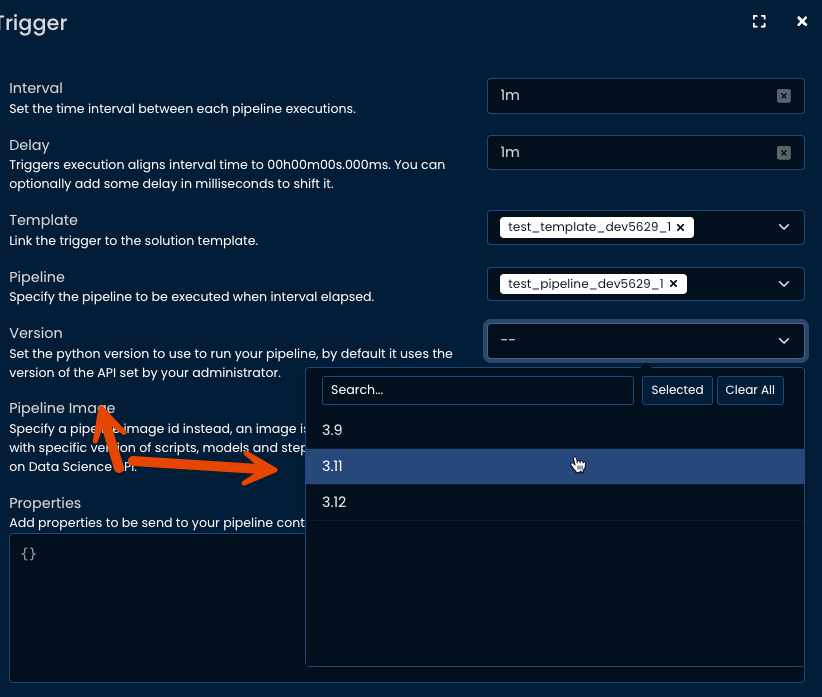

- Add display of the default Python version on the Trigger and Experiment execution pages

Bug Fixes

- Fix pipeline queue management by trigger services with the new Python multi-version support

- Properly display the Python version used in execution logs

- Fix collision issues with the left menu collapse arrow on the twin selector

- Fix the left menu collapse arrow button being unclickable in some cases

- Fix a sizing issue at the bottom of the models page

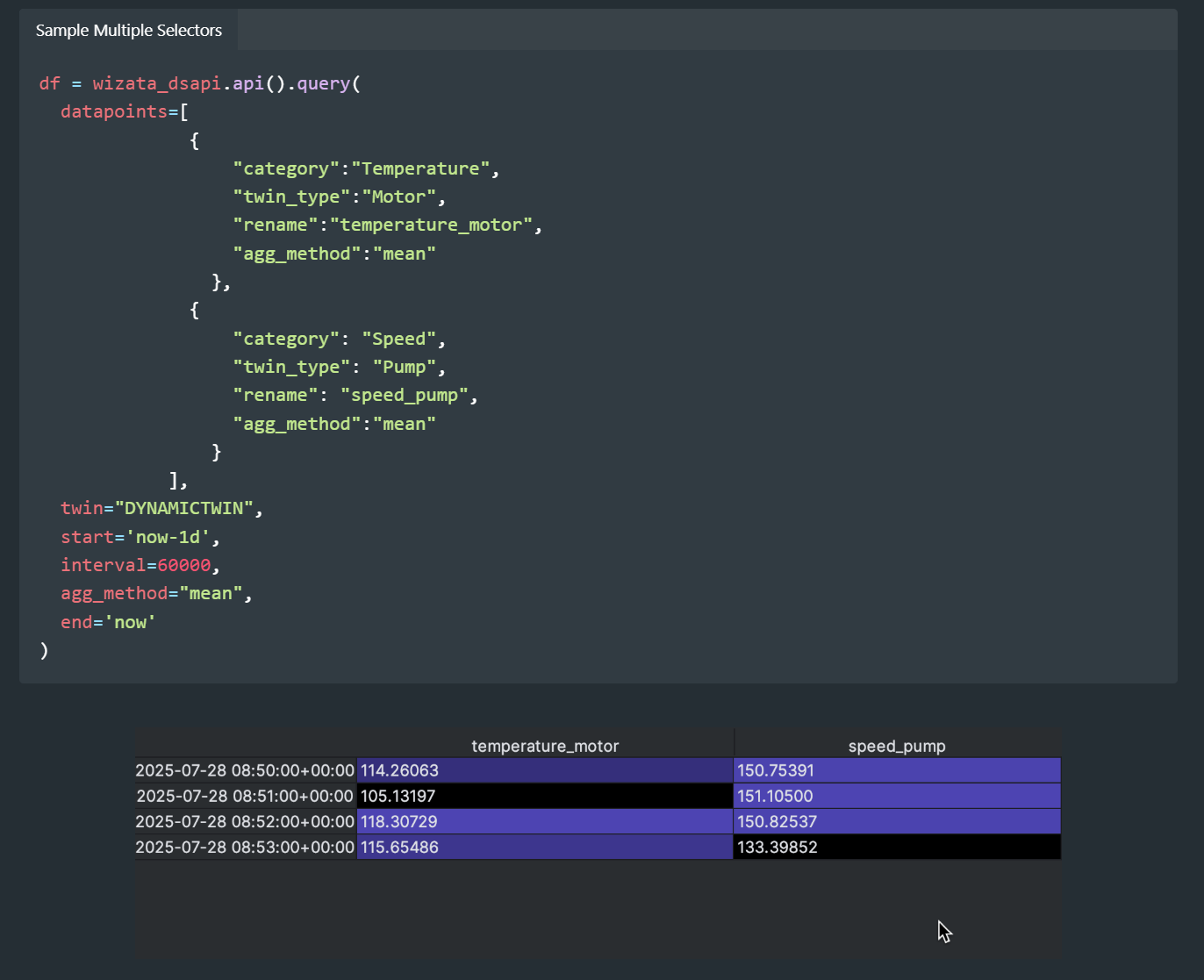

- Fix an issue where datapoints were not filtered properly in the Python toolkit

- Fix an issue with selecting the correct Python version when executing a pipeline directly from the API

- Fix an issue with deselecting datapoints after they are attached to a twin in the UI

- Fix an issue where some twins were created on the root page when no parent was assigned