Design your Event/Batch solution

The first step in working with events in Wizata is to design your solution in the data hub as twins and datapoints.

Time-series data lets you natively and easily query and store data along the time dimension. While very useful, this is not sufficient for every case, so Wizata also lets you query and store data corresponding to events.

The Events & Batches solution allows you to associate names or IDs with data. This is useful in many cases, for example:

- Track batches across different assets during a process.

- Label anomalies or incidents.

- Annotate time periods and data relevant for training a model.

In this article you will learn:

- What an event is

- How to define a group system for your solution

- How to define your datapoints of type event

- How to link them to the data through the digital twin, so that the event is associated with all datapoints on the same twin and its children

You can follow up with these related articles:

- Define the proper ingestion rules so your data is stored correctly; see Understanding Time-Series DB Structure and Learn how to upload event data

- Use advanced queries to retrieve all data linked to specific events

Sample

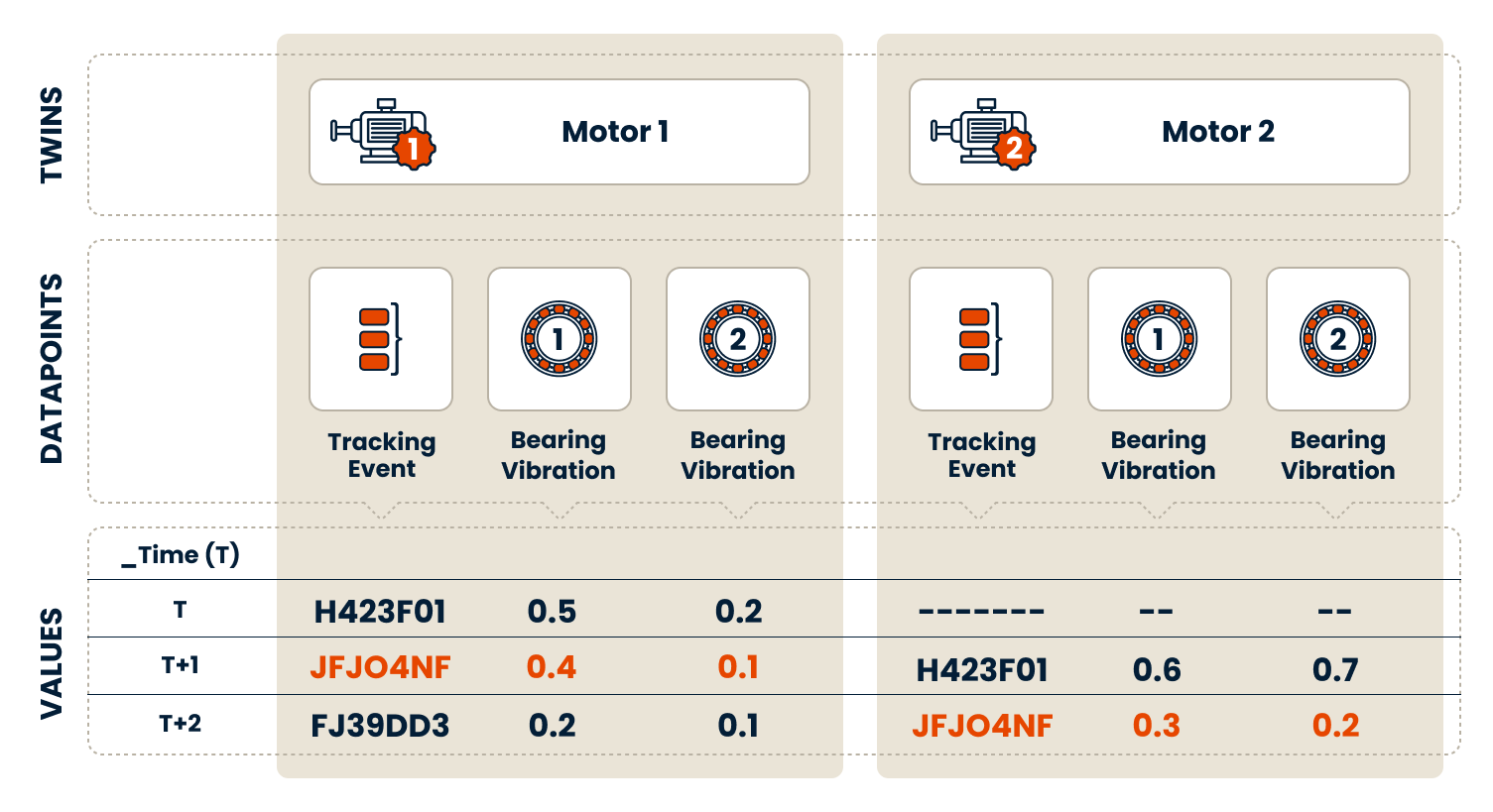

Let's explore an example where we want to get telemetry data for a specific batch/event ID regardless of the timestamp. This example is used throughout the documentation to illustrate the concept.

Extending the example outlined in the Twins article, let's imagine we have two motors containing sensors that measure bearing vibrations. We can design a solution to retrieve the vibration data from our bearings for the exact period during which a particular batch ID was processed.

The schema illustrates a simple example designed to track information for the event with ID JFJO4NF during its transition from the first to the second process (highlighted in orange).

What is event-related data?

Event data tracks when, what and where something happens. An event has a start timestamp, identified by the Event Status On, and an end timestamp, identified by Off, both associated with an Event ID.

For example, an event can be a batch processing log, where each row links a specific batch ID to the machine (process) that handled it, together with a start or stop timestamp.

Here is an example of a batch processing log:

| Event ID | Event Status | Machine | Timestamp |
|---|---|---|---|
| H423F01 | On | Motor 1 bearings | 2024-11-13T00:00:00+00:00 |
| H423F01 | Off | Motor 1 bearings | 2024-11-13T01:48:31+00:00 |
| H423F01 | On | Motor 2 bearings | 2024-11-13T01:48:31+00:00 |
| H423F01 | Off | Motor 2 bearings | 2024-11-13T03:22:31+00:00 |
| JFJO4NF | On | Motor 1 bearings | 2024-11-13T01:48:31+00:00 |
| JFJO4NF | Off | Motor 1 bearings | 2024-11-13T03:54:31+00:00 |

For instance, on November 13th, 2024, between 00:00:00 and 01:48:31, the batch with ID H423F01 was being handled by the bearings of motor 1 (first two rows of the table). While H423F01 moved on to the second process, handled by the bearings of motor 2, a new batch with ID JFJO4NF began processing on the bearings of motor 1 (last two rows of the table).
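To make the On/Off semantics concrete, here is a minimal Python sketch that pairs each On row with the next Off row for the same Event ID and machine, turning the batch processing log above into time intervals. It is purely illustrative: the tuple layout and the `to_intervals` helper are hypothetical and are not part of the Wizata platform or DSAPI.

```python
from datetime import datetime

# Batch processing log from the table: (event_id, status, machine, timestamp)
LOG = [
    ("H423F01", "On", "Motor 1 bearings", "2024-11-13T00:00:00+00:00"),
    ("H423F01", "Off", "Motor 1 bearings", "2024-11-13T01:48:31+00:00"),
    ("H423F01", "On", "Motor 2 bearings", "2024-11-13T01:48:31+00:00"),
    ("H423F01", "Off", "Motor 2 bearings", "2024-11-13T03:22:31+00:00"),
    ("JFJO4NF", "On", "Motor 1 bearings", "2024-11-13T01:48:31+00:00"),
    ("JFJO4NF", "Off", "Motor 1 bearings", "2024-11-13T03:54:31+00:00"),
]


def to_intervals(rows):
    """Hypothetical helper (not part of Wizata/DSAPI): pair each On row with the
    next Off row for the same (event_id, machine) and return
    (event_id, machine, start, end) tuples."""
    open_events = {}  # (event_id, machine) -> start datetime of the open event
    intervals = []
    # Process rows chronologically; rows with equal timestamps keep their order (stable sort)
    for event_id, status, machine, ts in sorted(rows, key=lambda r: datetime.fromisoformat(r[3])):
        key = (event_id, machine)
        t = datetime.fromisoformat(ts)
        if status == "On":
            open_events[key] = t
        elif status == "Off" and key in open_events:
            intervals.append((event_id, machine, open_events.pop(key), t))
    return intervals


for event_id, machine, start, end in to_intervals(LOG):
    print(event_id, machine, end - start)
```

Each batch ends up with one interval per machine; for example, H423F01 spends 1:48:31 on the Motor 1 bearings. In this sketch an Off row without a matching On is simply ignored; a real pipeline would probably want to report it instead.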

This is a brief preview of the sample event data that will be uploaded in the next article. First, however, we need to define a group system.

See How to upload event data and Time-Series DB Structure to learn how to handle the event data structure and import/connectivity.

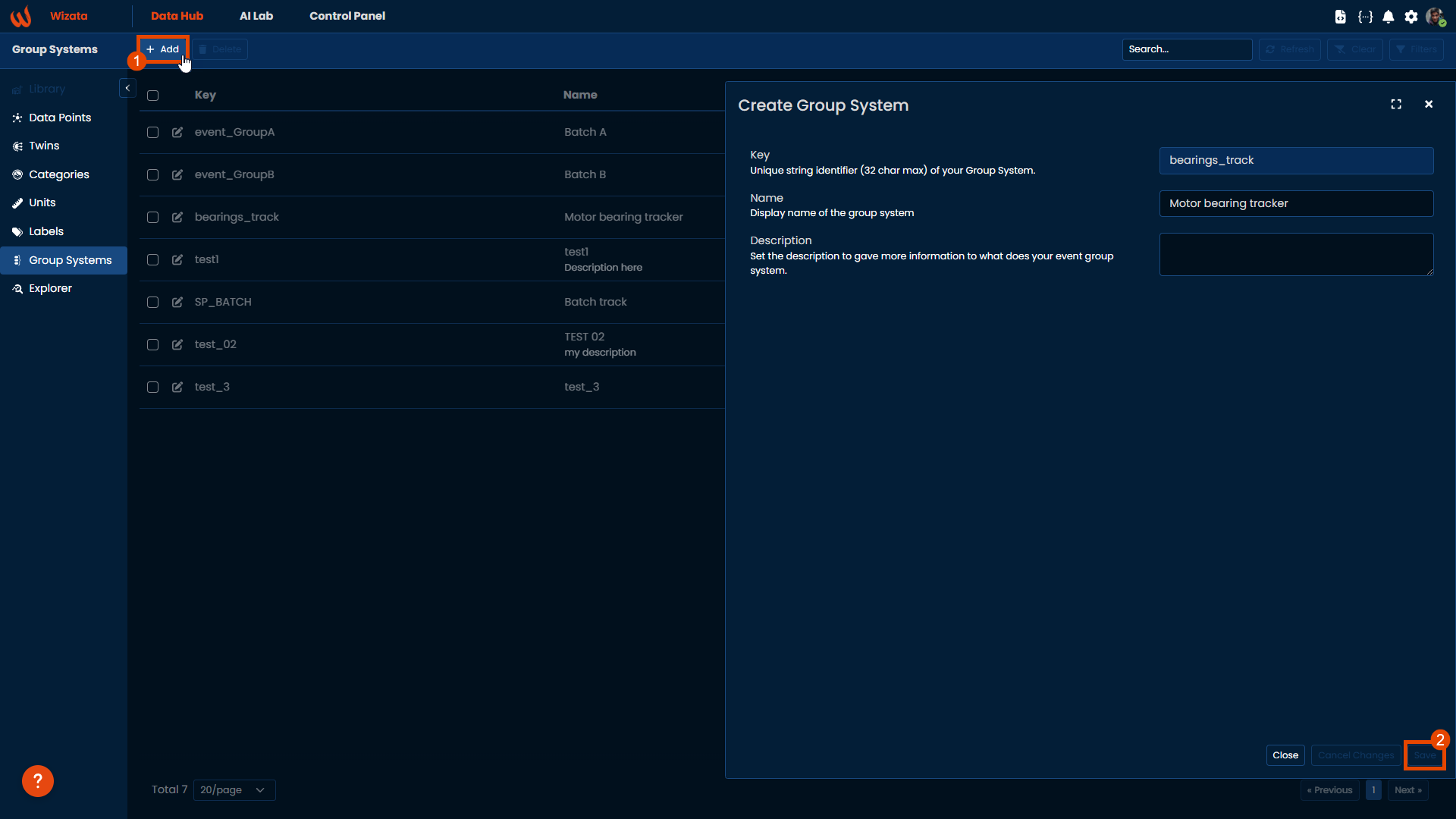

Group System

Events can be of different kinds: anomalies, batch-number tracking, alarms, working shifts, etc. A group system therefore groups related events and their data together.

A group system is mandatory in your query to retrieve all data associated with your event.

In our example, let's create a group system named 'bearings_track', which links our batch-tracking data with the motor bearings twins and datapoints in order to monitor the batch process on the motor bearings.

Alternatively, you can create the group system with DSAPI using this simple script:

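```python
import wizata_dsapi

# Define the group system; its key is used to reference it later
group_system_object = wizata_dsapi.GroupSystem(
    key="bearings_track",
    name="Motor bearings tracker",
    description=""
)

# Create the group system on the platform (assumes the DSAPI connection is already configured)
wizata_dsapi.api().create(group_system_object)
```

We also have technical documentation for the Group System object in our Python SDK documentation.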

Datapoints

To track your event, you need a datapoint of type event with two Event Status values: On, identifying the start, and Off, identifying the stop.

To follow the example from the table, since we will upload our data via Event Hub, a datapoint of business type Event will be created automatically, and the data for Event ID H423F01 will be sent as two messages: one On at 2024/11/13 00:00:00 and one Off at 2024/11/13 01:48:31.

You can find more details about Event business type datapoints in the following documentation article.

Twins

Events and related data within your solution (aka. group system) are associated through the digital twin. If this is done properly, the On/Off period of every event ID can be used to select the related data from non-event datapoints.
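Conceptually, selecting data for an event amounts to keeping the telemetry samples whose timestamps fall within that event's On/Off period. The sketch below illustrates only this idea in plain Python; it is not how Wizata executes event queries, `select_in_event` is a hypothetical helper, and the hourly readings are dummy values.

```python
from datetime import datetime, timedelta, timezone


def select_in_event(samples, start, end):
    """Hypothetical helper: keep (timestamp, value) samples recorded during an
    event, using a half-open window start <= timestamp < end."""
    return [(t, v) for t, v in samples if start <= t < end]


# On/Off period of batch JFJO4NF on the Motor 1 bearings (from the batch log)
start = datetime(2024, 11, 13, 1, 48, 31, tzinfo=timezone.utc)
end = datetime(2024, 11, 13, 3, 54, 31, tzinfo=timezone.utc)

# Dummy hourly vibration readings from 00:00 to 04:00, for illustration only
base = datetime(2024, 11, 13, tzinfo=timezone.utc)
samples = [(base + timedelta(hours=h), float(h)) for h in range(5)]

selected = select_in_event(samples, start, end)
print([t.strftime("%H:%M") for t, _ in selected])
```

The half-open window is a choice made for this sketch: H423F01 stops and JFJO4NF starts on the Motor 1 bearings at the same timestamp (01:48:31), so a sample at exactly that instant is attributed to only one batch. The platform's own boundary handling may differ.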

Any telemetry datapoint can be associated with an event by belonging to the same twin tree:

- A non-event datapoint is associated with the event datapoint if the event datapoint is on the same twin,

- OR on a parent of that twin (including grandparents, etc.),

- BUT an event datapoint for a given group system must be unique on the twin tree.

In summary, within one group system, a non-event datapoint can be used to associate Event IDs if, following the twin parent structure, it can be linked to one and only one event datapoint without ambiguity.
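The rules above can be sketched as a walk up the twin tree: start from the twin of the non-event datapoint, collect the event datapoints of one group system found on it and on each ancestor, and require at most one. This is a minimal illustration, not the platform's implementation; `resolve_event_datapoint` and the twin IDs `motor_1` and `motor_1_bearings` are hypothetical.

```python
def resolve_event_datapoint(twin, parents, event_datapoints):
    """Hypothetical helper: return the event datapoint (for one group system)
    that a non-event datapoint on `twin` is associated with, or None.

    parents: maps each twin to its parent twin (None for a root twin).
    event_datapoints: maps a twin to the event datapoints of the group system on it.
    """
    found = []
    current = twin
    # Walk up from the datapoint's own twin through its parent, grandparent, etc.
    while current is not None:
        found.extend(event_datapoints.get(current, []))
        current = parents.get(current)
    if len(found) > 1:
        # More than one candidate: the association would be ambiguous
        raise ValueError(f"ambiguous event datapoints for {twin}: {found}")
    return found[0] if found else None


# Twin tree from the example: the bearings twin is a child of the motor twin
parents = {"motor_1": None, "motor_1_bearings": "motor_1"}
events = {"motor_1": ["mt1_bearings_tracking"]}

print(resolve_event_datapoint("motor_1_bearings", parents, events))
```

Because the event datapoint sits on the parent (motor) twin, datapoints on both the motor twin and its bearings child resolve to the same event datapoint, while adding a second event datapoint of the same group system anywhere along that chain makes the association ambiguous.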

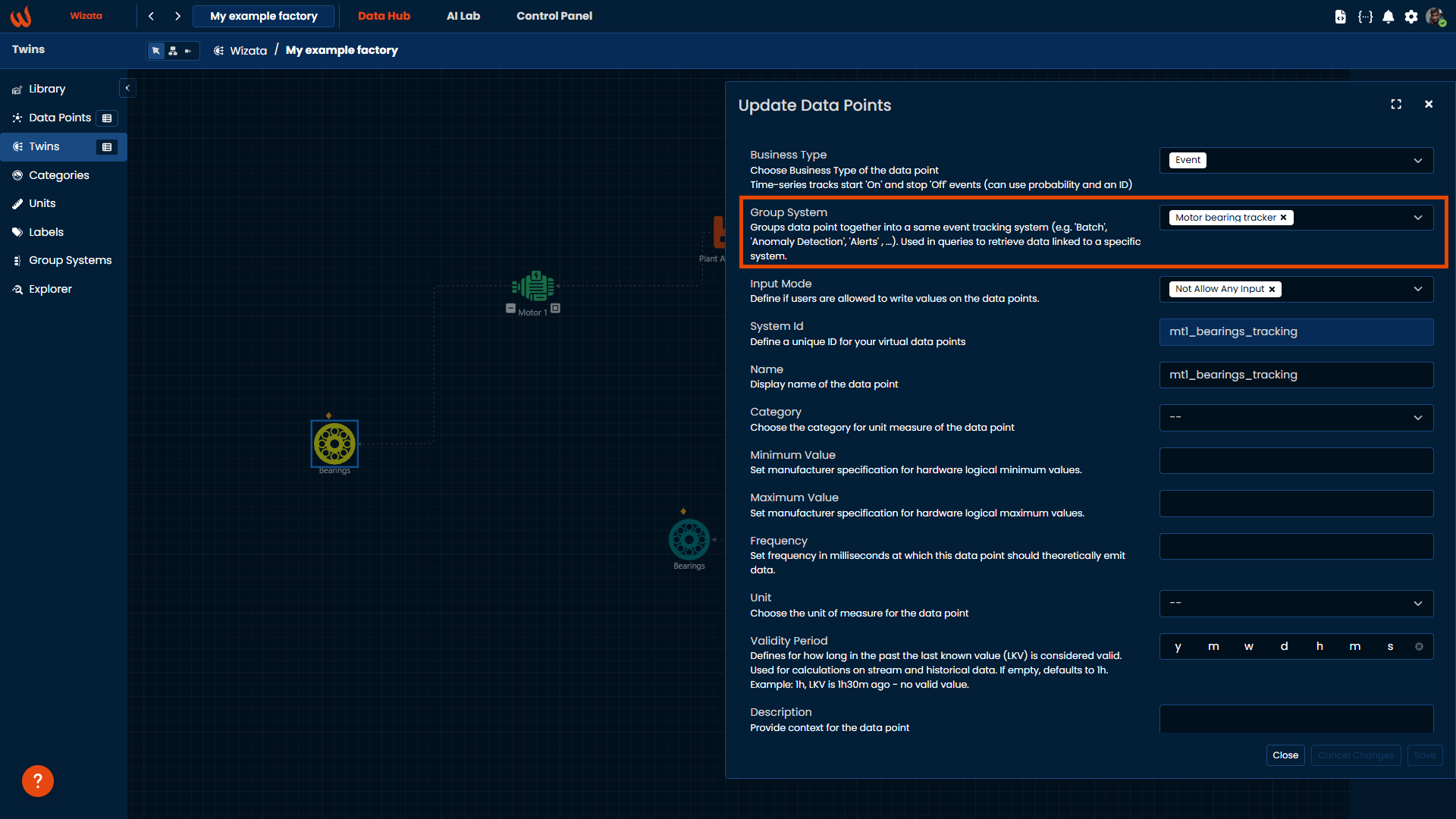

Associate the event datapoint to the twin

We first need to link our Event datapoint to a twin, and then make sure all other datapoints are associated with the same twin or one of its children.

Linking our Event datapoints to their related twin follows the same logic as for datapoints of other business types: drag and drop the desired Event datapoints onto their respective twin.

To attach our Event datapoints to the bearings_track group system, we need to edit the metadata of those Event datapoints in the platform.

With DSAPI you can also automate this process by retrieving the ID of the created group system and attaching it to the desired event datapoint objects. The following script attaches all event datapoints whose hardware ID contains _bearings_tracking, using a simple pagination loop:

```python
import wizata_dsapi

datapoint_partial_name = '_bearings_tracking'
i = 1  # Page number
page_size = 20  # Number of datapoints per page
total = None
has_more = True

# Retrieve the group system created earlier to get its ID
group_system_object = wizata_dsapi.api().get(key="bearings_track", entity=wizata_dsapi.GroupSystem)

while has_more:
    # Search event datapoints whose hardware ID contains the partial name
    datapoints = wizata_dsapi.api().search_datapoints(hardware_id=datapoint_partial_name, page=i, size=page_size,
                                                      business_types=['event'])
    if i == 1:
        total = datapoints.total

    # Attach each event datapoint on this page to the group system and save it
    for datapoint in datapoints.results:
        datapoint.group_system_id = group_system_object.group_system_id
        wizata_dsapi.api().upsert_datapoint(datapoint)

    # Exit condition if no more datapoints to update
    total -= page_size
    i += 1
    if total <= 0:
        has_more = False
```

Then we need the information associated with the event to be available as normal telemetry datapoints. Those additional datapoints should be linked to the same digital twin item as the Event datapoint, or to one of its children.

In our example, the mt1_bearings_tracking event datapoint was attached to the parent twin (the motor) instead of the child twin (the bearings), so datapoints on the bearings twin are still associated with it.

If you are not yet familiar with it, you can read more about the parent-child hierarchy in this article: Twins: Parent-child hierarchy


In the next article, we will show you how to upload event-related data to the platform.