Query

A Query formalizes the process of retrieving data from the time-series database using constraints such as digital twin, time, aggregation, and/or filtering. You can fetch data using a Request object, which returns fully formatted Pandas dataframe.

You will notice that the concept of a query is also discussed in the Pipeline section, as a query can also function as a pipeline Step.

Structure

Request format use a logical JSON based format wrapped into wizata_dsapi.Request entity.

It contains different key elements:

- datapoints determines which time-series values need to be yield by the query. Alternatively or in combination of digital twin and template consideration for a dynamic selection.

- timeframe can be fixed or relative and helps determining the time range to select.

- aggregations determines how rows are aggregated together within the selection : e.g. which function is used and interval.

- filters can be used to filter out some records using simple conditions.

- bucket(optional, for InfluxDB 2.x) can be used to choose among different buckets available.

- group can be used to group data on specific event system such as batch or anomalies

- options are used to alter the behavior of the query to apply pre or treatment ; e.g. how to handle null values.

- field non required for numeric time-series, by default to value. It determines which information is return by the query.

Important to have a good understanding at Time-Series DB Structure and different Data Points concepts such as business types

Generally, datapoints, timeframe and aggregations are required for a simple query to select specific time-series within a certain timeframe and returning the values aggregated with a certain function and interval.

Sample Query

Using the following logic, we will be able to fetch the information from datapoints in the platform. A query can be send either using the Python Toolkit or using the API.

For our case on the bearing datapoints from the motor sensor, we will retrieve the data from all the bearings attached to our motor 1, adding a filter for the bearing_sensor1 values that are higher than 0.060.

Here's example with Python or with the API:

from datetime import datetime

import wizata_dsapi

df = wizata_dsapi.api().query(

datapoints=['mt1_bearing1', 'mt1_bearing2', 'mt1_bearing3', 'mt1_bearing4'],

start=datetime.strptime("2024-08-29", "%Y-%m-%d"),

end=datetime.strptime("2024-08-30", "%Y-%m-%d"),

agg_method="mean",

interval=600000,

filters={

"mt1_bearing1": {

">": 0.060

}

}

){

"datapoints": [

"mt1_bearing1",

"mt1_bearing2",

"mt1_bearing3",

"mt1_bearing4"

],

"timeframe": {

"start": 1724889600000,

"end": 1724976000000

},

"aggregations": {

"agg_method": "mean",

"interval": 600000

},

"filters": {

"mt1_bearing1": {

">": 0.060

}

}

}You can also use a JSON file to define your query and parse it from Python. In our case, we will store the JSON with the name bearing_query.json. You will use the following code to parse and execute your query:

query = wizata_dsapi.Request()

file = open("bearing_query.json")

query.from_json(json.load(file))

df = wizata_dsapi.api().get(query)Query parameters

Datapoints

You can define the datapoints you want to select directly from their hardware ID or using a template.

To use datapoints simply provide a list of str corresponding to Hardware ID in the platform.

df = wizata_dsapi.api().query(

...

datapoints=['mt1_bearing1', 'mt1_bearing2', 'mt1_bearing3', 'mt1_bearing4'],

...

)You can specify the key identifier of a solution template to fetch datapoints.

If you are querying data directly you should also pass the hardware ID of an asset registered on the template. Inside a pipeline, the hardware ID of the asset is passed at runtime.

df = wizata_dsapi.api().query(

...

template='example_template',

twin='example_factory',

...

)By default, query will return all properties of type ‘datapoint’ from your template. You can use datapoints to a pass a list of template properties to select.

df = wizata_dsapi.api().query(

...

template='example_template',

twin='bearing_sensor1',

datapoints=['mt1_bearing1'],

...

)In addition, you can use a dynamic list of datapoints stored as a JSON property of your template :

- Add a template property of type JSON

- Add a registration and on the value use a JSON list of hardware IDs

- On the query using template, simply use the name of the template property in the datapoints list

Timeframe

There different way to select timeframe within a request.

You can use a static value of “datetime” format, using epoch integer in JSON (ms) and a datetime in python:

df = wizata_dsapi.api().query(

...

start=datetime.strptime("2023-01-01", "%Y-%m-%d"),

end=datetime.strptime("2023-07-17", "%Y-%m-%d"),

...

)"timeframe": {

"start": 1682035200000,

"end": 1682294400000

},Alternatively, you can use the format “relative” to specify the start and end value. “relative” is a str based on “now” plus or minus or a delay.

Format is now then + or - then aninteger value then aunit ( y, M, d, h, m, s, ms ).

For example:

start="now-1d"andend="now"to extract last day datastart="now-2M"andend="now"to extract last two months data

df = wizata_dsapi.api().query(

...

start="now-1d",

end="now",

...

)

Year are corresponding to a fix period of 365 days, and Month to a fix period of 30 days.

Group

As previously mentioned, you can query grouped data based on a specific event system. To do this, you simply need to provide a group system ID/key and a list of event IDs.

df = wizata_dsapi.api().query(

...

group={

"system_id": "bearings_track",

"event_ids": ["FE303FV", "H423F01"]

}

...

)

We have a dedicated page explaining Event business type and how to query batch datapoints with a sample data demonstration.

Aggregation

When fetched from platform all data are aggregated following an aggregation method and an interval.

Interval

The interval is a fixed number representing milliseconds.

Additionally, we can set interval to

None. This will be useful when fetching for non-aggregated data like Text data point

Please adapt your interval to the period fetched to avoid any performance issue within your environment.

Method

The agg_method is a str representing the method used to aggregate the data. By default, query will use ‘mean’ if not specified differently.

The query accept the following methods :

- mean

- stddev

- mode

- median

- count

- sum

- first

- last

- max

- min

e.g. using ‘count’ for a 1 min interval.

df = wizata_dsapi.api().query(

...

agg_method="count",

interval=60000,

...

)Raw data support

For a detailed and more-precise analysis, we can create queries to fetch for raw data using DSAPI.

In order to achieve it, we simply need to change the agg_method value to None

df = wizata_dsapi.api().query(

...

agg_method=None,

...

)The response dataframe from a raw data query will have a different index format than queries with aggregation methods.

Filters

Filters can be used to filter a specific datapoint based on its value.

Filters are cumulative and can do simple comparison such as:

- ‘<’ less than

- ‘<=’ less or equal than

- ‘=’ equal

- ‘!=’ different

- ‘>’ greater than

- ‘>=’ greater or equal than

Filters compared the datapoint value to a fixed float value. It consists of a dict where key is the name of the datapoint/property and value is another dict based on operator for the key and float as value.

Filters must apply do a datapoint selected within the request. In case you use a template, instead use template property name.

Options

Options can be used in order to influence the query behavior.

They are passed if necessary inside the options as a dictionary.

df = wizata_dsapi.api().query(

...

options={

'null' : 'all',

'fill_behavior' : 'interpolation',

'auto_fill_set_points' : False

}

...

)null

null option tell query how to deal with missing values. It is executed post-aggregation. By default, set to ‘drop’ from experiment and ‘ignore’ on query from code;

- drop: drop all rows that contains at list one missing values.

- all: drop all rows only if all columns are missing values.

- ignore: do not drop any rows and ignore missing values.

fill_behavior

fill_behavior is executed after “null” and tell query how to complete any missing value left. By default, set to ‘ignore’;

- ignore: leave missing values untouched.

- forward: using ffill function to fill values with the previous known value.

- back: using bfill function to fill values with the next known value.

- interpolation: using AI to try interpolate the missing values.

auto_fill_set_points

auto_fill_set_points accepts True or False boolean value. By default, set as True. System will consider any last known value as active for any datapoint of type Set Point and will fill all missing values with it until a new value is found. Set it to False to disable that behavior.

Field

By default, the query is returning the value field from time-series.

But you can retrieve others fields, based on existing time-series data - format & types. To do so, you need to add the parameter field to your query. The field parameter can accept a str or a list of str.

df = wizata_dsapi.api().query(

...

field=['valueStr', 'value'],

...

)df = wizata_dsapi.api().query(

...

field='valueStr',

...

)Fields can be of float or text type;

- If you attempt to retrieve a field that is not numerical, therefore your dataframe will not be formatted

- If you retrieve multiple numerical fields the dataframe will contain a multi-index using the name of the field.

df = wizata_dsapi.api().query(

datapoints=['dp_a','dp_b'],

...

field=['reliability', 'value'],

...

)

df['dp_a']['reliability']Batch query

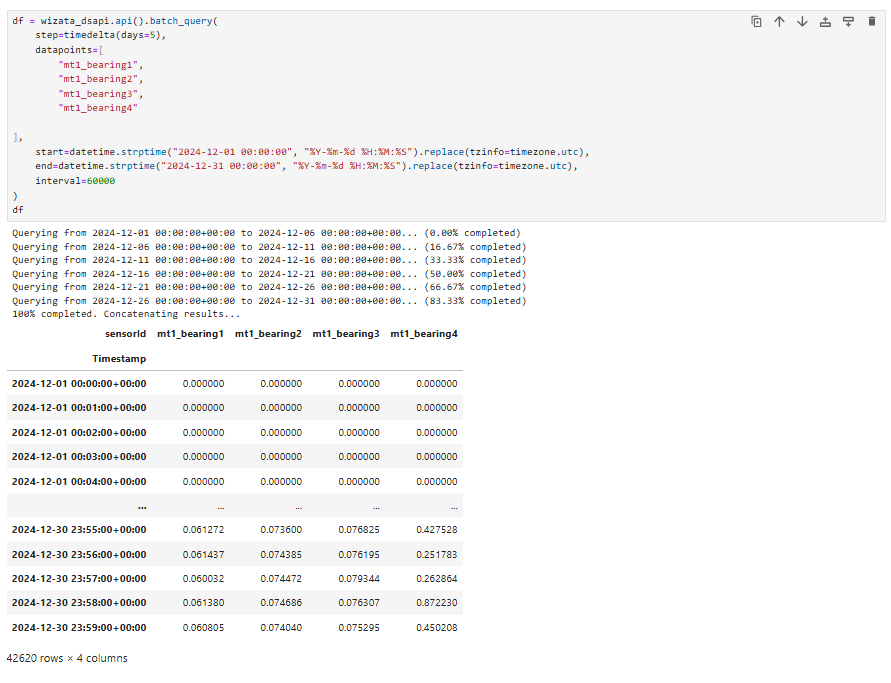

When you need to retrieve large amounts of data, you can use the batch_query() method to split your request into smaller time chunks, reducing the load on the system and avoiding query timeouts.

Unlike a standard query(), batch queries include an additional step parameter, defined as a time_delta, which determines the size of each time segment to fetch. This approach is ideal when working with large historical datasets.

For example, you can query one month of motor bearing data using 60-second intervals, split into 5-day batches, with the following logic:

from datetime import timedelta, timezone, datetime

df = wizata_dsapi.api().batch_query(

step=timedelta(days=5),

datapoints=[

"mt1_bearing1",

"mt1_bearing2",

"mt1_bearing3",

"mt1_bearing4"

],

start=datetime.strptime("2024-12-01 00:00:00", "%Y-%m-%d %H:%M:%S").replace(tzinfo=timezone.utc),

end=datetime.strptime("2024-12-31 00:00:00", "%Y-%m-%d %H:%M:%S").replace(tzinfo=timezone.utc),

interval=60000 # 60 seconds

)

print(df)

Note: Use

verbose=Falseif you want to suppress printed output.

Updated 4 months ago